Getting started with reproducibility in research!

Enrico Glerean

Often researchers ask themselves: "Am I able to re-run the same process/analysis and re-obtain the same results that I have published?"

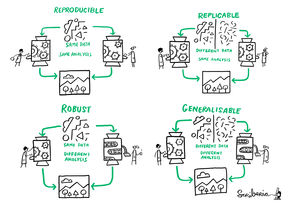

Reproducibility, replicability, reusability, ... multiple names for a similar issue: are we able to reproduce the same results that others have published? Or, more selfishly: am I able to re-run the same process/analysis and re-obtain the same results that I have published? This applies to both quantitative and qualitative research: is the whole research process described so clearly that somebody else with the same tools would draw the same conclusions?

In some cases reproducibility issues can be fixed by, for example, documenting all the steps, automating some processes to remove human error, or no longer using old methods that have been shown to be biased and to produce unreliable findings. In other cases the reproducibility issues might be deeper and not so easy to fix: are we measuring what we think we are measuring? Can we actually conduct reproducible research on a certain topic?

Reproducibility is one of the core elements of the European Code of Conduct for Research Integrity; however, it has been neglected too many times because the current system creates incentives for publishing a paper, and not for what might happen after the paper is published. I might be able to publish some lucky results, but that does not mean that others will be able to replicate my findings. If on top of that you add sloppy project management, lack of clarity about the protocols/methods/code used, and data secrecy, then we are a few steps away from falsification and research misconduct.

Step 1. Be aware of the reproducibility crisis

First: get a picture of the problem. There are great papers like "Why Most Published Research Findings Are False" (Ioannidis 2005) or "A manifesto for reproducible science" (Munafò et al. 2017), and there are even journal clubs (recently the Finnish Reproducibility Network ran a Twitter journal club with a collection of 24 articles on reproducibility). At Aalto there is a ReproducibiliTEA journal club ongoing right now (Spring 2022, by Jan Vornhagen). In almost every research field somebody has written a paper about reproducibility. If you have limited time, check one of our past presentations and videos on the topic:

- Making your research reproducible (by Mika Jalava) [Slides]

- How to make your code reusable 12.11.2020 (by Enrico Glerean) [Video]

- Responsible conduct of research: reproducibility, replicability, and questionable research practices 28.04.2021 (by Enrico Glerean) [Video]

Step 2. Identify your reproducibility goals

Do you aim for a fully reproducible pipeline where, with the press of a button, you can redo all your analyses and (re)produce the same figures and numbers? Or are you happy if, six months from today, your future self knows exactly what to re-run and thanks you for writing things down clearly? You might be working with other people, so it is good to also consider the "winning-the-lottery" scenario: you win the lottery and decide to immediately move to a tropical island, leaving all your work colleagues behind. Nobody can call you or ask you anything. Would one of your colleagues be able to check your folders and files and reproduce what you did?
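As a rough illustration of the "press of a button" goal, here is a minimal Python sketch, assuming your analysis can be wrapped in a single function. The names `run_pipeline` and `fingerprint` are hypothetical and the data are simulated; in a real project the first step would read your raw data files instead. The sketch fixes the random seed, stores the Python version and platform next to the results, and checks that two runs produce identical numbers.

```python
import hashlib
import json
import platform
import random
import sys


def run_pipeline(seed: int = 42, n: int = 1000) -> dict:
    """Hypothetical end-to-end analysis: every step runs from one call."""
    rng = random.Random(seed)  # local generator with a fixed seed, no hidden global state
    # Step 1: "load" the data (simulated here; replace with reading your raw data files)
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Step 2: run the analysis
    mean = sum(data) / n
    # Step 3: return the results together with the context needed to reproduce them
    return {
        "seed": seed,
        "n": n,
        "mean": round(mean, 10),
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }


def fingerprint(results: dict) -> str:
    """SHA-256 hash of the numerical results only, so runs can be compared quickly."""
    numbers = {key: results[key] for key in ("seed", "n", "mean")}
    return hashlib.sha256(json.dumps(numbers, sort_keys=True).encode()).hexdigest()


if __name__ == "__main__":
    first = run_pipeline()
    second = run_pipeline()
    print(json.dumps(first, indent=2))
    print("identical re-run:", fingerprint(first) == fingerprint(second))
```

The fingerprint deliberately leaves out the environment fields, so a colleague on another machine can compare only the numbers while still seeing which Python version and platform produced your original results.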

Step 3. Identify reproducibility issues in your work

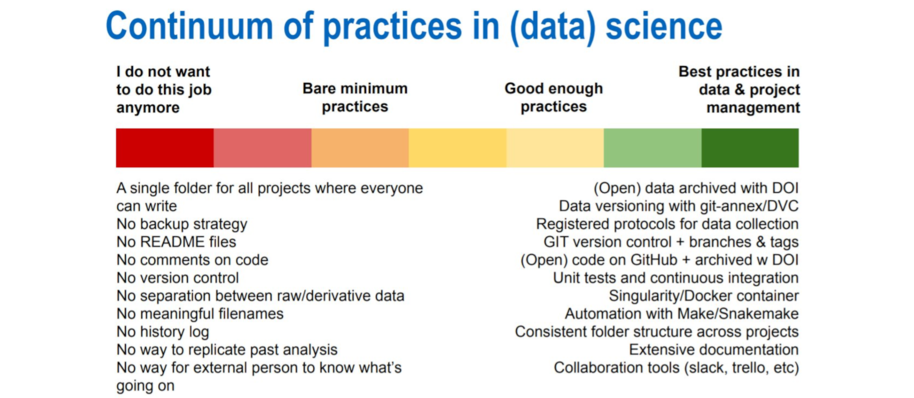

Everyone is obsessed with the state of the art or best practices. Yes, reaching perfection is a noble goal, but do we need 100% perfection? "Good enough practices", or even "barely sufficient practices", can still be useful because they help us identify what we can already improve in our project management / data management / methods management workflows, and act accordingly.

Do not feel overwhelmed! You do not need to pick up all the best practices tomorrow: pick one and make it a habit. Then add one more...

Step 4. Pick something to fix in your workflow

Now that you have decided what to improve, you can work on it. Do not feel that you are on your own. You can come and talk with experts from the Aalto Open Science team and the Aalto Scientific Computing team. We can help you make your data open, your methods reproducible, and your code open, and we can help with organizing your files, documenting your research process, version control, containers, automated pipelines... the list goes on and on. Just pick one and fix that. Not sure? Start a conversation with us at researchdata@aalto.fi or join our daily Zoom hour to discuss live https://scicomp.aalto.fi/help/garage/.

Step 5. Go back to Step 3 and repeat

Repeat until you are satisfied. Repeat until your future self is so happy that you have left all the instructions on how to reproduce past results. Repeat until you see others re-using your data and methods, reproducing your results and building on top of them!

Step 6. Success! You are now creating reproducible research!

It is great that you made the effort to improve your workflows and that your research is now reproducible.

Some phenomena are not easy to reproduce. Because their effect size is small, detecting them might require a lot of statistical power, which in practice means large samples. It is challenging to work with these topics, but a transparent approach can come to the rescue.
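To get a feeling for how much data small effects need, a common back-of-the-envelope formula (a normal approximation for comparing the means of two groups with a two-sided test) gives the sample size per group as n = 2 × ((z₁₋α/₂ + z₁₋β) / d)², where d is the standardized effect size (Cohen's d), α the significance level and 1 − β the desired power. The helper `n_per_group` below is a hypothetical sketch using only the Python standard library; dedicated power-analysis tools that use the exact t-distribution give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed per group for a two-sided, two-sample comparison.

    Uses the normal approximation n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    which slightly underestimates the result of an exact t-test calculation.
    """
    if d <= 0:
        raise ValueError("effect size d must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value of the two-sided test
    z_power = z.inv_cdf(power)          # quantile corresponding to the desired power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


if __name__ == "__main__":
    for d in (0.8, 0.5, 0.2):  # conventional "large", "medium" and "small" effects
        print(f"d = {d}: about {n_per_group(d)} per group")
```

With α = 0.05 and 80% power this gives about 25 per group for d = 0.8, 63 for d = 0.5 and 393 for d = 0.2: shrinking the effect size by a factor of four multiplies the required sample by roughly sixteen.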

If you have a hypothesis, you can pre-register it before collecting and analyzing the data. Others can review your hypothesis (e.g. with registered reports) and give you feedback before you engage in this difficult challenge. By keeping your research process open from the "idea stage" to its final implementation, you can transparently show that you did not use questionable methods to obtain something that is statistically significant but irreproducible. Furthermore, this also solves the file-drawer problem: if you are transparent about what you are planning to do and others agree with your plan, a negative finding is an important result to publish, so that others do not repeat the same study.

In these difficult cases, also consider multi-lab studies, where similar data are collected and similar methods are run by multiple labs. You will reduce the biases that might be specific to your own environment, and produce solid science in large-scale projects with people from around the world who are interested in the same topic as you.

If you want to be more involved in raising awareness of these issues, promoting activities related to reproducibility, and in general discussing with others how we can make research more reproducible, we welcome you to the Finnish Reproducibility Network https://www.finnish-rn.org/. Enrico Glerean at Aalto is one of the founding members of the network and is happy to discuss how to join it. The Finnish Reproducibility Network especially welcomes local nodes, individuals or research groups who are interested in these topics and would like to be more active. Unsure? Please contact enrico.glerean@aalto.fi or join our daily Zoom hour to discuss live https://scicomp.aalto.fi/help/garage/.