A new study shows that reinforcement learning from human feedback (RLHF), a common way to train artificial intelligence (AI) models, gets a boost when human users have an interactive dashboard for giving feedback during training. This approach led to better model outputs and faster training. The study, recently published in the journal Computer Graphics Forum, also produced open-source code for improving RLHF.

Aligning AI behavior with human preferences is important for making AI tools more useful. One way to do this is with RLHF, where users can, for example, compare two AI outputs side by side, rewarding one and punishing the other to guide the training of an AI system towards desired outputs. Gathering human feedback this way is not only slow; it also doesn’t give users the full picture of possible outputs or an idea of what end goal they should aim for with their feedback, says Antti Oulasvirta, professor at Aalto University and the Finnish Center for Artificial Intelligence (FCAI).
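
For readers curious how a single side-by-side comparison can turn into a training signal, here is a minimal sketch in Python. It assumes a Bradley-Terry preference model, which is a common choice in RLHF but is not confirmed here as the method used in the study. The function names, the learning rate and the starting scores are all hypothetical.

```python
import math


def preference_probability(reward_a: float, reward_b: float) -> float:
    """Bradley-Terry probability that output A is preferred over output B."""
    return 1.0 / (1.0 + math.exp(reward_b - reward_a))


def update_rewards(reward_a: float, reward_b: float, a_preferred: bool,
                   learning_rate: float = 0.1) -> tuple[float, float]:
    """One gradient-ascent step on the log-likelihood of a human comparison.

    The preferred output's score goes up ("rewarded") and the other
    output's score goes down ("punished") by the same amount.
    """
    p_a = preference_probability(reward_a, reward_b)
    target = 1.0 if a_preferred else 0.0
    step = learning_rate * (target - p_a)
    return reward_a + step, reward_b - step


# Hypothetical example: a user repeatedly prefers output A over output B.
reward_a, reward_b = 0.0, 0.0
for _ in range(50):
    reward_a, reward_b = update_rewards(reward_a, reward_b, a_preferred=True)

print(reward_a > reward_b)  # the preferred output ends up with the higher score
```

Each comparison adjusts only two scores, which illustrates why collecting feedback one pair at a time can be slow.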

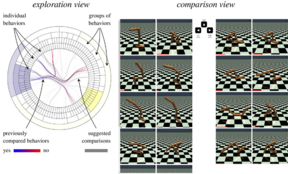

The team of researchers from Aalto, the University of Trento and KTH Royal Institute of Technology therefore created a way to augment traditional reinforcement learning. “Since humans have a great ability to compare and explore data visually, we developed an interactive visualizer that shows all the possible AI behaviors, in this case of a simple robotics simulation,” explains doctoral researcher Jan Kompatscher. In the study, people were asked to make comparisons of the positions of on-screen robotic skeletons that were learning certain postures and movements, like walking or doing backflips.

With the visualizer, test subjects training the AI model were no longer confined to a side-by-side comparison of two items at a time. They could interactively explore comparisons they had already made, see suggested new comparisons, and access the entire catalog of possible simulated movements and positions. Test subjects reported that the training process with the visualizer felt more efficient and useful but was not as easy to use as the more familiar comparison method. The simulated skeletons also performed up to 60% better when trained by users with the interactive visualizer, compared to just side-by-side comparisons, though the number of test subjects in this study was limited.

“Giving people full control lets them express preferences over groups of behaviors, making the process more efficient,” says Kompatscher. “In the same amount of time for the conventional comparison process, test subjects were able to give more informative feedback, leading to better learning for the model.”
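
One way to see why feedback over groups can be more informative is to count the pairwise comparisons that a single group-level judgment implies. The Python sketch below is an illustration under that assumption only; it is not the study's implementation, and the function name and behavior labels are hypothetical.

```python
from itertools import product


def expand_group_preference(preferred_group: list[str],
                            rejected_group: list[str]) -> list[tuple[str, str]]:
    """Treat every behavior in preferred_group as preferred over every
    behavior in rejected_group, and return the implied (winner, loser) pairs."""
    return list(product(preferred_group, rejected_group))


# Hypothetical labels for simulated movements.
good_walks = ["walk_01", "walk_02", "walk_03"]
falls = ["fall_01", "fall_02"]

pairs = expand_group_preference(good_walks, falls)
print(len(pairs))  # 6 pairwise preferences from one group-level judgment
```

In this toy case a single judgment stands in for six side-by-side comparisons, and the number grows with the size of the groups.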

“We believe that harnessing humans’ cognition and abilities, and giving them agency in the process, leads to better training of AI models,” Oulasvirta concludes.

Publication and contacts:

Kompatscher, J., Shi, D., Varni, G., Weinkauf, T., & Oulasvirta, A. (2025). Interactive groupwise comparison for reinforcement learning from human feedback. Computer Graphics Forum, DOI: 10.1111/cgf.70290