Human–Computer Interaction Guest Talks: Alexandra Ion (Digital Fabrication) and David Lindlbauer (Mixed Reality)

When

Where

Metamaterial Devices

Alexandra Ion

ETH Zurich

Abstract

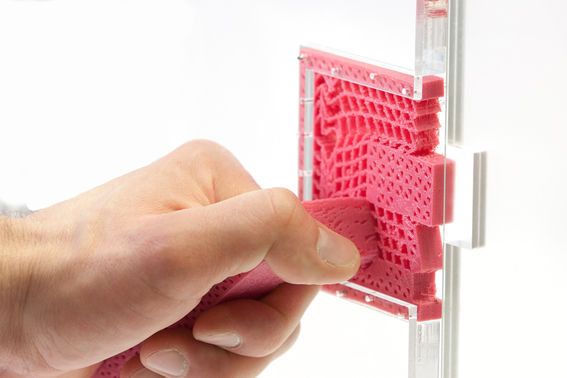

3D printers allow for arranging matter freely in space, thereby enabling the fabrication of arbitrary shapes with complex internal structures. Such structures, typically based on 3D cell grids, are known as metamaterials. Metamaterials have been shown to incorporate extreme properties such as change in volume, programmable shock-absorbing qualities or locally varying elasticity. Traditionally, metamaterials were understood as materials—I think of them as *devices*.

I argue that viewing metamaterials as devices allows us to push their boundaries further. In my research, I propose unifying material and device, and I develop “metamaterial devices”. To instantiate this new type of device, I investigated three aspects: (1) materials that process analog inputs, (2) materials that process digital signals, and (3) materials that output information to the user by changing their outer textures.

I believe that future materials will be able to sense their environment, process that information, and produce output or reconfigure themselves, all without electronics and entirely within the material structure itself. Such materials can be fabricated from one block of a single material; thus, they require no assembly and miniaturize well. The intricate cell structure that enables such materials is very challenging to design because all cells need to move in concert, which demands efficient computational design tools. I argue that, once the challenges around design tools are solved, such intelligent materials will push the boundaries of fields such as medical implants, soft robotics, aeronautics, and energy-harvesting materials.

Bio

Alexandra Ion is a postdoctoral researcher at ETH Zurich, working with Prof. Olga Sorkine-Hornung on computational design tools for complex geometry. She completed her PhD with Prof. Patrick Baudisch at the Hasso Plattner Institute in Germany. Alex’s work is published at top-tier HCI venues (ACM CHI & UIST). Her work received Best Paper honourable mention awards at ACM UIST and CHI, and was invited to exhibitions, including a permanent exhibition at the Ars Electronica Center in Austria.

Dynamic Visual Appearance to Bridge the Virtual and Physical World

David Lindlbauer

ETH Zurich

Abstract

In the virtual world, changing properties of objects such as their color, size, or shape is one of the main means of communication. Objects are hidden or revealed when needed, or change their color or size to communicate importance. These properties cannot be changed instantly in the real world. Bringing these richer features to the real world would enable us to access the virtual world in ways beyond flat displays and 2D windows, and embed information into physical objects by making them dynamic. But this can be challenging to achieve.

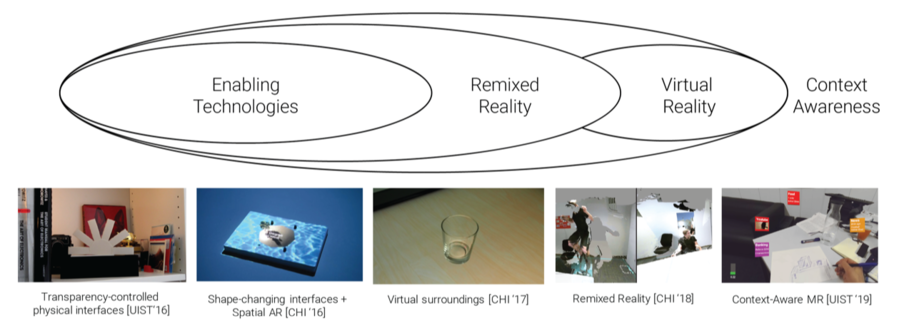

In this talk, I will present three distinct aspects that are important when bridging the gap between the virtual and the real world. First, I will present approaches that enable dynamic visual appearance of real-world objects: dynamic objects, augmented objects, and augmented surroundings. I will show examples of those enabling technologies, including transparency-controlled physical objects that can communicate with users by changing their perceived shape, and a way to alter the perception of existing physical objects by modifying their surrounding space. Second, to experience what a completely controllable physical world would be like, I will present an approach called Remixed Reality, which replaces what users see with rendered geometry. This enables showing virtual content at any time and in any shape or form, and making nearly arbitrary modifications to the environment. Finally, to avoid overloading users with virtual content, I will present an optimization-based approach for Mixed Reality systems to automatically control when and where applications are shown, and how much information they display, based on users' cognitive load, task, and environment.

Bio

David Lindlbauer is a postdoctoral researcher in the field of Human–Computer Interaction, working at ETH Zurich in the Advanced Interaction Technologies Lab, led by Prof. Otmar Hilliges. He holds a PhD from TU Berlin, where he worked with Prof. Marc Alexa in the Computer Graphics group, and interned at Microsoft Research in the Perception & Interaction Group. His research focuses on the intersection of the virtual and the physical world, how the two can be blended, and how borders between them can be overcome. David has created enabling technologies such as physical interfaces with controllable transparency that can hide and appear only when needed, and has changed the appearance of real-world objects by combining them with virtual capabilities through projection and displays. He explores approaches that allow users to seamlessly transition between different levels of virtuality, from seeing the pure physical world without augmentation to fully artificial reality, as well as computational methods that make such approaches more usable. He has worked on projects to expand and understand the connection between humans and technology, from dynamic haptic interfaces to 3D eye-tracking. His research has been published in premier venues such as ACM CHI and UIST, and has attracted media attention in outlets such as MIT Technology Review, Fast Company Design, and Shiropen Japan.