Speech Synthesis

Speaking machines have long been a central research interest in speech processing and machine learning. Speech synthesis is a key component in, for example, conversational agents, personal digital assistants, audiobook readers, and assistive devices such as screen readers and voice prostheses. Modern speech synthesis methods achieve close to human-level naturalness by using deep generative models such as WaveNet, GANs, diffusion models, and Transformer language models.

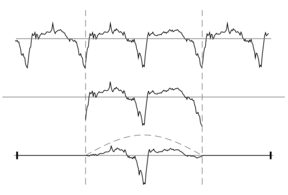

Current technical challenges in speech synthesis include efficiency, control, and interpretability. State-of-the-art systems rely on large neural network models, which are computationally expensive black boxes. Research in the Aalto Speech Synthesis Group combines classic digital signal processing methods with differentiable computing to make neural synthesis efficient and interpretable.

Instant voice cloning is another trend in speech synthesis technology. A voice cloning system can adapt to a new voice from just a few seconds of audio. This opens many exciting applications, but it also poses pressing challenges for deepfake detection. Responsible synthesis through watermarking is a current research topic in the Aalto Speech Synthesis Group.

The Aalto Speech Synthesis Group collaborates on these topics with KTH Royal Institute of Technology, Sweden, and the National Institute of Informatics (NII), Japan.

Current research topics

- Generative models for speech synthesis: GANs, WaveNets, diffusion models, discrete representation learning from audio, language models for sound

- Differentiable DSP: digital signal processing as building blocks for efficient neural synthesis systems

- Watermarking generative models; speech deepfake detection, countermeasures, and awareness

The Aalto Speech Synthesis Group is led by Professor Lauri Juvela.